Kubernetes (often abbreviated as K8s) is an open-source platform designed to automate deploying, scaling, and managing containerized applications. It commonly runs container images built with tools like Docker. It was originally developed by Google and is now maintained under the Cloud Native Computing Foundation (CNCF). Kubernetes manages clusters of containers across multiple machines, handles load balancing and scaling, and offers self-healing capabilities.

Key features

- Scheduling: Deciding which server or node will run each container.

- Scaling: Automatically adjusting the number of running containers based on application demand.

- Networking: Facilitating communication between containers and ensuring external traffic reaches the right services.

- Load Balancing: Distributing network traffic across multiple containers to ensure high availability and reliability.

- Self-Healing: Detecting and restarting failed containers, ensuring the system remains operational with minimal manual intervention.

- Storage Management: Supporting various storage solutions, such as local storage, cloud provider volumes, and network storage systems.

- Service Discovery: Automatically assigning DNS names to Services (and their pods), allowing applications to find and communicate with each other.

- Configuration Management: Managing configuration data and secrets (through ConfigMaps and Secrets) outside the application container images.
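The Scaling feature is typically provided by the HorizontalPodAutoscaler (HPA). Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule. The 0.1 tolerance mirrors the documented default. The function name and the min/max clamping defaults are illustrative assumptions. The real controller also handles missing metrics, not-ready pods, and stabilization windows, which are omitted here.

```javascript
// Minimal sketch of the HorizontalPodAutoscaler replica calculation.
// desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)
// Hypothetical helper: min/max clamping mirrors the HPA spec fields, but
// missing-metric handling and stabilization windows are omitted.
function desiredReplicas(currentReplicas, currentMetric, targetMetric, {
  minReplicas = 1,
  maxReplicas = 10,
  tolerance = 0.1, // skip scaling when the ratio is within 10% of 1.0
} = {}) {
  const ratio = currentMetric / targetMetric;
  if (Math.abs(ratio - 1.0) <= tolerance) {
    return currentReplicas; // close enough to the target: no change
  }
  const desired = Math.ceil(currentReplicas * ratio);
  return Math.min(maxReplicas, Math.max(minReplicas, desired));
}

// Example: 2 pods averaging 90% CPU against a 50% target.
console.log(desiredReplicas(2, 90, 50)); // 4
```

Rounding up means the autoscaler errs toward having enough capacity. The tolerance band keeps it from flapping on small metric fluctuations.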

Kubernetes can be run in different environments, from local development machines to public, private, or hybrid cloud infrastructures, and is a key component in modern DevOps practices.

Kubernetes Architecture

Kubernetes is made up of several key components that work together to manage containerized applications. The main parts of the Kubernetes architecture are:

1. Master Node (Control Plane): The control plane manages the Kubernetes cluster. It is responsible for scheduling, scaling, and maintaining the desired state of the system.

- API Server: The central management entity that exposes the Kubernetes API. It is the interface through which users, administrators, and the other cluster components interact with the cluster.

- Scheduler: Decides which node each newly created pod will run on, based on resource requests and other constraints.

- Controller Manager: Maintains the desired state by monitoring the cluster and making adjustments (e.g., creating replacement pods when existing ones fail, or keeping a Deployment at its requested replica count).

- etcd: A distributed key-value store that Kubernetes uses to store all cluster data (configuration, state, etc.).

2. Worker Nodes: These are the machines (virtual or physical) where the containers run.

- Kubelet: An agent that runs on each node. It ensures that containers are running in a pod and communicates with the API server to manage workloads.

- Kube-proxy: Manages network rules and routing to allow communication between pods and services.

- Container Runtime: The software responsible for running the containers (e.g., containerd, CRI-O). Since Kubernetes 1.24 removed dockershim, Docker Engine can only be used through the cri-dockerd adapter.
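The "desired state" idea behind the Controller Manager is implemented as a reconciliation loop. A controller repeatedly compares what is declared (for example, a ReplicaSet asking for N pods) with what is observed, then acts to close the gap. The JavaScript below is a simplified, in-memory sketch of one reconciliation step for replica counts only. The pod objects, the `reconcile` function, and the `createPod`/`deletePod` callbacks are hypothetical stand-ins for real API server requests, not actual controller-manager code.

```javascript
// Simplified sketch of a ReplicaSet-style reconciliation step.
// All names are hypothetical; a real controller watches the API server
// and issues create/delete requests instead of calling callbacks.
function reconcile(desiredReplicas, pods, { createPod, deletePod }) {
  // Only pods that have not failed count toward the observed state.
  const live = pods.filter((p) => p.phase !== 'Failed');
  const diff = desiredReplicas - live.length;

  if (diff > 0) {
    // Too few pods: create replacements (self-healing / scale up).
    for (let i = 0; i < diff; i++) createPod();
  } else if (diff < 0) {
    // Too many pods: remove the surplus (scale down).
    live.slice(0, -diff).forEach((p) => deletePod(p.name));
  }
  return diff; // positive = created, negative = deleted, 0 = in sync
}

// Usage: desired 3, one of three pods has failed, so one replacement is made.
const observedPods = [
  { name: 'web-a', phase: 'Running' },
  { name: 'web-b', phase: 'Failed' },
  { name: 'web-c', phase: 'Running' },
];
let created = 0;
reconcile(3, observedPods, { createPod: () => created++, deletePod: () => {} });
console.log(created); // 1
```

Running this step repeatedly, rather than reacting once to a single event, is what lets the cluster converge back to the declared state after any kind of disruption.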

Kubernetes Ecosystem

- Helm: A package manager for Kubernetes that allows you to define, install, and upgrade even the most complex Kubernetes applications using Helm charts (pre-configured Kubernetes resources).

- Prometheus: A widely used monitoring tool in Kubernetes environments. It collects metrics from applications and cluster components, allowing for detailed insights into performance.

- Kustomize: A native Kubernetes configuration management tool that allows you to customize Kubernetes objects declaratively.

- Fluentd and ELK Stack: For log aggregation, Kubernetes clusters are often paired with Fluentd or Logstash to collect logs, Elasticsearch to store them, and Kibana to visualize them.

Example-1: We will use minikube to set up a simple local (single-node) Kubernetes cluster, deploy an application, and access it.

Minikube is a tool that allows you to run a single-node Kubernetes cluster locally. It's excellent for testing Kubernetes applications without needing a full-blown multi-node cluster.

Step1: Install Docker, minikube, and kubectl

The Kubernetes command-line tool, kubectl, allows you to run commands against Kubernetes clusters. You can use kubectl to deploy applications, inspect and manage cluster resources, and view logs.

Step2: Start minikube (a local Kubernetes cluster)

minikube start

Step3: Verify that minikube is running

minikube status

Step4: Deploy a simple NGINX server in the Kubernetes cluster

kubectl create deployment nginx --image=nginx

Step5: Check the deployment

kubectl get deployments
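Behind the scenes, each kubectl command is an HTTP request to the API server. Core-group resources such as pods and services live under /api/v1. Grouped resources such as Deployments (group apps, version v1) live under /apis/<group>/<version>. The sketch below builds the collection path that a namespaced list like `kubectl get deployments` targets. The helper name is hypothetical. Running kubectl with a higher verbosity level (for example `-v=6`) should show the actual URLs it requests.

```javascript
// Hypothetical helper: build the REST path for listing a namespaced
// resource. Core-group resources (pods, services) use /api/v1;
// named groups (apps, batch, ...) use /apis/<group>/<version>.
function listPath({ group = '', version = 'v1', namespace = 'default', resource }) {
  const prefix = group === '' ? `/api/${version}` : `/apis/${group}/${version}`;
  return `${prefix}/namespaces/${namespace}/${resource}`;
}

// `kubectl get deployments` in the default namespace:
console.log(listPath({ group: 'apps', resource: 'deployments' }));
// /apis/apps/v1/namespaces/default/deployments

// `kubectl get pods` (core group):
console.log(listPath({ resource: 'pods' }));
// /api/v1/namespaces/default/pods
```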

Optionally, view the kubeconfig settings (clusters, users, and contexts) that kubectl uses to reach your cluster

kubectl config view

Step6: Expose the deployment to access the NGINX service

kubectl expose deployment nginx --type=NodePort --port=80

Step7: Get the URL of the service to access it from a browser

minikube service nginx --url

Open the URL returned by the above command in your browser. You should see the NGINX welcome page.

Step8: You can access your Kubernetes cluster dashboard (GUI) and see running pods and various other details of your cluster by running the command

minikube dashboard

Step9: Stop the minikube cluster

minikube stop

Step10: Clean up and delete the minikube cluster

minikube delete

Example-2: Create and deploy a Docker-containerized Node.js application to a local (single-node) Kubernetes cluster (minikube)

This example demonstrates how to deploy a simple Dockerized Node.js application to a Kubernetes cluster.

👉 You can deploy this Docker-containerized app to other Kubernetes platforms (such as AWS EKS), not just this local minikube cluster, by following the same steps. Only Step5 (where we start minikube) needs to change: start your own Kubernetes cluster and point kubectl at it instead, then deploy the app. The "minikube tunnel" command in Step7 is likewise specific to minikube.

cd my_workspace

Step1: Create a simple Node.js application

app.js

const app = express();

const port = 3000;

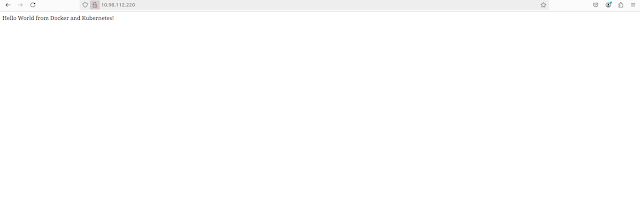

app.get('/', (req, res) => {

res.send('Hello World from Docker and Kubernetes!');

});

app.listen(port, () => {

console.log(`App listening on port ${port}`);

});

package.json

{
  "name": "my-nodejs-app",
  "version": "1.0.0",
  "description": "",
  "main": "app.js",
  "scripts": {
    "start": "node app.js"
  },
  "dependencies": {
    "express": "^4.17.1"
  }
}

Step2: Create a Dockerfile

Dockerfile

# Node.js LTS base image
FROM node:22

# Set the working directory
WORKDIR /app

# Copy package.json and install dependencies
COPY package.json /app
RUN npm install

# Copy the rest of the application files
COPY . /app

# Expose the port the app will run on
EXPOSE 3000

# Start the application
CMD ["npm", "start"]

Step3: Build and push the Docker image to Docker Hub (replace sirajchaudhary with your own Docker Hub username)

docker login

docker build -t sirajchaudhary/my-nodejs-app .

docker push sirajchaudhary/my-nodejs-app

Step4: Create Kubernetes deployment and service files

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nodejs-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-nodejs-app
  template:
    metadata:
      labels:
        app: my-nodejs-app
    spec:
      containers:
        - name: my-nodejs-app
          image: sirajchaudhary/my-nodejs-app
          ports:
            - containerPort: 3000
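With replicas: 2 and no explicit strategy, this Deployment uses the default RollingUpdate strategy, with maxSurge and maxUnavailable both set to 25%. Kubernetes converts those percentages to pod counts, rounding maxSurge up and maxUnavailable down. Together they bound how many pods may exist, or be unavailable, while a new image rolls out. The helper below is a hypothetical illustration of that arithmetic, not code taken from Kubernetes.

```javascript
// Hypothetical helper: resolve RollingUpdate percentages into pod counts.
// maxSurge is rounded up and maxUnavailable is rounded down.
function rollingUpdateBounds(replicas, maxSurgePct = 25, maxUnavailablePct = 25) {
  const maxSurge = Math.ceil((replicas * maxSurgePct) / 100);
  const maxUnavailable = Math.floor((replicas * maxUnavailablePct) / 100);
  return {
    maxSurge,
    maxUnavailable,
    maxTotalPods: replicas + maxSurge,           // upper bound during rollout
    minAvailablePods: replicas - maxUnavailable, // lower bound during rollout
  };
}

// replicas: 2 from deployment.yaml with the default 25%/25% strategy:
console.log(JSON.stringify(rollingUpdateBounds(2)));
// {"maxSurge":1,"maxUnavailable":0,"maxTotalPods":3,"minAvailablePods":2}
```

For two replicas, an update therefore starts one extra pod first and never drops below two available pods.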

service.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-nodejs-app
spec:
  selector:
    app: my-nodejs-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  type: LoadBalancer
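The Service finds its pods only through the label selector. Every ready pod whose labels include app: my-nodejs-app becomes an endpoint, and traffic arriving on port 80 is forwarded to targetPort 3000 on those pods. Inside the cluster, the Service is also reachable by a DNS name of the form <service>.<namespace>.svc.<cluster-domain>. The sketch below models both ideas in memory. The pod IPs, the `ready` flag, the `default` namespace, and the `cluster.local` domain are assumptions for illustration.

```javascript
// In-memory sketch of how a Service selects backend pods.
// Pod data is made up; real endpoints are maintained by the
// EndpointSlice controller from the pods the API server knows about.
const service = {
  name: 'my-nodejs-app',
  namespace: 'default', // assumption: applied without -n
  selector: { app: 'my-nodejs-app' },
  port: 80,
  targetPort: 3000,
};

// A pod matches when it carries every key/value pair in the selector.
function matches(selector, labels) {
  return Object.entries(selector).every(([k, v]) => labels[k] === v);
}

// Ready, matching pods become "ip:targetPort" endpoints.
function endpointsFor(svc, pods) {
  return pods
    .filter((p) => p.ready && matches(svc.selector, p.labels))
    .map((p) => `${p.ip}:${svc.targetPort}`);
}

// Cluster DNS name, assuming the default cluster domain cluster.local.
function serviceDnsName(svc, clusterDomain = 'cluster.local') {
  return `${svc.name}.${svc.namespace}.svc.${clusterDomain}`;
}

const samplePods = [
  { ip: '10.244.0.5', ready: true, labels: { app: 'my-nodejs-app' } },
  { ip: '10.244.0.6', ready: true, labels: { app: 'my-nodejs-app' } },
  { ip: '10.244.0.7', ready: true, labels: { app: 'nginx' } },
];

console.log(endpointsFor(service, samplePods)); // [ '10.244.0.5:3000', '10.244.0.6:3000' ]
console.log(serviceDnsName(service)); // my-nodejs-app.default.svc.cluster.local
```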

Step5: Start minikube (a local Kubernetes cluster)

minikube start

Step6: Deploy to Kubernetes. First apply the deployment, then apply the service.

kubectl apply -f deployment.yaml

kubectl apply -f service.yaml

Step7: Access the application.

Note: In a local Kubernetes setup like Minikube, services of type LoadBalancer don't automatically get external IP addresses because Minikube doesn't run in a cloud provider environment where a cloud load balancer is available. However, you can use minikube tunnel to simulate the behavior of a LoadBalancer service and assign an external IP to the service.

In a new terminal window, run "minikube tunnel" (leave it running), then run the following command in another terminal window to get the external IP:

kubectl get services

Once you get the external IP, you can access your app in a browser by navigating to http://<external-ip>

Or use curl:

curl http://<external-ip>
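To script this step instead of reading the EXTERNAL-IP column by eye, you can read the LoadBalancer address from the Service's status.loadBalancer.ingress list. That entry is an ip on minikube tunnel and many clouds, and a hostname on some providers such as AWS. The function below is a hypothetical helper that extracts the address from the JSON printed by `kubectl get service my-nodejs-app -o json`. It returns null while the address is still pending. The sample IP is made up.

```javascript
// Hypothetical helper: pull the external address out of the JSON that
// `kubectl get service my-nodejs-app -o json` prints.
function externalAddress(serviceJson) {
  const svc = typeof serviceJson === 'string' ? JSON.parse(serviceJson) : serviceJson;
  const ingress = svc?.status?.loadBalancer?.ingress ?? [];
  if (ingress.length === 0) return null; // still <pending>
  // Some providers publish an IP, others (e.g. AWS) a hostname.
  return ingress[0].ip ?? ingress[0].hostname ?? null;
}

// Usage with a trimmed-down sample:
const sample = '{"status":{"loadBalancer":{"ingress":[{"ip":"10.96.184.178"}]}}}';
const addr = externalAddress(sample);
console.log(addr ? `http://${addr}` : 'external IP pending');
// http://10.96.184.178
```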

Example-3: Here is an example of setting up a multi-node Kubernetes cluster using kubeadm, kubectl, and kubelet.

Example-4: Here is an example of setting up a multi-node Kubernetes cluster using AWS EKS.

👉 'kubectl' CLI tool: It is the primary command-line interface (CLI) tool for interacting with any Kubernetes cluster, regardless of whether it's a local cluster (like Minikube) or a remote one (in the cloud or on-prem). It allows you to run commands against Kubernetes clusters to manage applications, inspect and modify cluster resources, and troubleshoot issues.

Running Kubernetes in production environments involves configuring resources for high availability (HA), monitoring, security hardening, and disaster recovery. Many enterprise projects use managed Kubernetes services provided by cloud vendors, such as:

- Amazon EKS (Elastic Kubernetes Service)

- Google GKE (Google Kubernetes Engine)

- Azure AKS (Azure Kubernetes Service)

Kubernetes Alternatives and Related Platforms

Docker Swarm

- Overview: Docker Swarm is a native clustering and orchestration tool for Docker containers. It offers easy setup and integration with Docker environments.

- Advantages:

  - Simplicity and ease of use.

  - Tight integration with Docker.

  - Less resource-intensive than Kubernetes.

- Use Cases: Small- to medium-scale deployments, or when a straightforward orchestration tool is needed.

HashiCorp Nomad

- Overview: Nomad is a simple and flexible scheduler and orchestrator that supports containerized (e.g., Docker) and non-containerized workloads.

- Advantages:

  - Lightweight and easy to set up.

  - Can run different types of workloads, not limited to containers.

  - Integrates well with HashiCorp's ecosystem (Consul for service discovery, Vault for secrets management).

- Use Cases: Organizations using the HashiCorp toolchain or seeking a lightweight alternative to Kubernetes.

Red Hat OpenShift

- Overview: OpenShift is Red Hat’s enterprise Kubernetes distribution with additional features and tools for developers and enterprises.

- Advantages:

  - Strong focus on enterprise features like security, CI/CD integration, and developer tools.

  - Built on Kubernetes but provides a more comprehensive platform.

  - Supports hybrid and multi-cloud environments.

- Use Cases: Enterprises looking for a more secure and supported Kubernetes distribution.

Rancher

- Overview: Rancher is a complete container management platform for running Kubernetes clusters. It simplifies cluster management and provides tools to manage multi-cluster environments.

- Advantages:

  - Simplifies Kubernetes cluster setup and management.

  - Multi-cluster management.

  - User-friendly UI for monitoring and management.

- Use Cases: Organizations managing multiple Kubernetes clusters or seeking a more user-friendly interface for Kubernetes.

Apache Mesos and Marathon

- Overview: Apache Mesos is a distributed systems kernel that abstracts resources across machines. Marathon is a container orchestration framework for Mesos.

- Advantages:

  - Can run containers and other distributed workloads.

  - Highly scalable and designed for large, fault-tolerant systems.

- Use Cases: Large-scale systems with diverse workloads beyond containers.

Amazon ECS (Elastic Container Service)

- Overview: ECS is a fully managed container orchestration service provided by AWS.

- Advantages:

  - Fully managed by AWS, with less operational overhead.

  - Deep integration with AWS services.

  - Simpler than Kubernetes, with a focus on the AWS ecosystem.

- Use Cases: AWS users who want to run containers without the complexity of Kubernetes.

Google Cloud Run

- Overview: Google Cloud Run is a serverless platform that abstracts away infrastructure management for containers.

- Advantages:

  - Serverless, meaning it automatically scales based on traffic.

  - Fully managed by Google Cloud, minimal setup.

  - Pay only for the resources consumed.

- Use Cases: Developers looking for a serverless platform to run containers without managing orchestration.

Azure Container Instances (ACI)

- Overview: ACI is Microsoft Azure’s service for running containers without orchestration.

- Advantages:

  - No need for container orchestration.

  - Quick setup and scalability.

  - Easy integration with other Azure services.

- Use Cases: Quick, short-lived container tasks or when container orchestration is unnecessary.

Rancher Cattle

- Overview: Cattle was the original orchestration engine of Rancher before it transitioned to Kubernetes.

- Advantages:

  - Simple to manage and deploy.

  - Suitable for smaller or simpler workloads.

- Use Cases: Small-scale deployments needing a basic orchestration solution.

Flynn

- Overview: Flynn is an open-source Platform-as-a-Service (PaaS) that allows developers to deploy, run, and scale applications easily.

- Advantages:

  - Full PaaS that handles databases, apps, and containers.

  - Automatic scaling and load balancing.

- Use Cases: Teams looking for a PaaS with integrated orchestration.