Apache Kafka is a distributed streaming platform used for building real-time data pipelines and streaming applications. It is designed for high-throughput, fault-tolerant, and scalable messaging. It was originally developed at LinkedIn and later open-sourced through the Apache Software Foundation. Kafka is primarily used for three key functions:

Publish and Subscribe: Kafka allows applications to publish and subscribe to streams of records, which makes it similar to a message queue or enterprise messaging system.

Store Streams of Data: Kafka can store streams of records in a fault-tolerant, durable manner. The stored data can be persisted for a defined period, making it suitable for applications that need to process and analyze historical data as well as live data.

Process Streams: Kafka allows applications to process streams of data in real time as they are produced. This is useful for real-time analytics, monitoring systems, and event-driven architectures.

Producers: Producers are applications that write data (records) to Kafka topics.

Consumers: Consumers are applications that read data from topics.

Topic: A topic is a category or feed name to which records are published. Each record in Kafka belongs to a topic.

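Partition: A subdivision of a topic. Each partition is an ordered, append-only sequence of records. Because a topic's partitions can be spread across brokers and read by consumers in parallel, partitioning is what allows Kafka to scale horizontally. Ordering is guaranteed only within a partition, not across a whole topic.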
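The sketch below illustrates how keyed records map to partitions. It is a simplified model built around a hypothetical `choose_partition` helper: Kafka's default partitioner hashes the serialized key with murmur2, whereas this sketch substitutes Python's `zlib.crc32`, so the partition numbers it produces will not match a real cluster. What carries over is the idea: a deterministic hash of the key, taken modulo the partition count.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index.

    Illustrative only: Kafka's default partitioner uses murmur2, not crc32.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # crc32 returns a non-negative 32-bit integer, so the result is in [0, num_partitions).
    return zlib.crc32(key) % num_partitions


if __name__ == "__main__":
    for key in (b"user-1", b"user-2", b"user-1"):
        print(key, "->", choose_partition(key, 6))
```

Because the same key always yields the same partition, all records for `user-1` stay in order relative to each other. Changing the number of partitions changes the mapping, which is one reason partition counts are usually chosen up front.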

Broker: A Kafka server that stores messages in topics and serves client requests. A Kafka cluster consists of multiple brokers.

Replication: The process of copying data across multiple brokers to ensure durability and availability. Each partition can have multiple replicas.

Leader and Follower: In a replicated partition, the replica on one broker acts as the leader (handling all writes and, by default, all reads), while the replicas on other brokers are followers that copy data from the leader.
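A toy model can make the leader/follower split concrete. In the sketch below (the `Partition` class and its methods are hypothetical), producers append only to the leader, followers fetch copies, and consumers see only records below the high watermark, meaning the offset that every in-sync replica has reached. Real brokers also track ISR membership changes, leader epochs, and incremental fetches, none of which are modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """Toy model of one replicated partition (not Kafka's implementation)."""
    leader_log: list = field(default_factory=list)
    # In-sync follower id -> that follower's log-end offset (next offset it needs).
    follower_leo: dict = field(default_factory=dict)

    def append(self, record) -> int:
        """Producers write only to the leader; returns the record's offset."""
        self.leader_log.append(record)
        return len(self.leader_log) - 1

    def follower_fetch(self, follower: str) -> None:
        """Simplified: the follower copies everything the leader currently has."""
        self.follower_leo[follower] = len(self.leader_log)

    def high_watermark(self) -> int:
        """Smallest log-end offset across the leader and its in-sync followers."""
        return min([len(self.leader_log), *self.follower_leo.values()])

    def consumer_read(self) -> list:
        """Consumers only see records below the high watermark."""
        return self.leader_log[: self.high_watermark()]


if __name__ == "__main__":
    p = Partition(follower_leo={"broker-2": 0, "broker-3": 0})
    p.append("a")
    p.append("b")
    print(p.consumer_read())  # [] because no follower has copied anything yet
    p.follower_fetch("broker-2")
    p.follower_fetch("broker-3")
    print(p.consumer_read())  # ['a', 'b']
```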

Offset: A sequential integer that identifies each record within a partition. Consumers commit offsets to record how far they have read, so they can resume from that point after a restart.

Consumer Lag: For each partition, the difference between the log-end offset (the offset the next produced record will receive) and the consumer group's committed offset. It indicates how far a consumer is behind; a topic's total lag is the sum across its partitions.
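The lag arithmetic is simple enough to sketch directly. The hypothetical `partition_lag` helper below takes log-end offsets and committed offsets keyed by (topic, partition). In practice both numbers come from the cluster, and `kafka-consumer-groups.sh --describe` reports them per partition along with the resulting LAG. Treating a partition with no committed offset as starting from offset 0 is an assumption of this sketch; real consumers fall back to their `auto.offset.reset` setting.

```python
def partition_lag(log_end_offsets: dict, committed_offsets: dict) -> dict:
    """Lag per (topic, partition) = log-end offset - committed offset."""
    lags = {}
    for tp, end_offset in log_end_offsets.items():
        # Assumption: a partition with no commit is treated as starting at offset 0.
        committed = committed_offsets.get(tp, 0)
        lags[tp] = max(end_offset - committed, 0)
    return lags


def total_lag(log_end_offsets: dict, committed_offsets: dict) -> int:
    """Total lag for the group: the sum of per-partition lags."""
    return sum(partition_lag(log_end_offsets, committed_offsets).values())


if __name__ == "__main__":
    ends = {("orders", 0): 120, ("orders", 1): 95}
    commits = {("orders", 0): 100, ("orders", 1): 95}
    print(partition_lag(ends, commits))  # {('orders', 0): 20, ('orders', 1): 0}
    print(total_lag(ends, commits))      # 20
```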

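Schema Registry: A service for managing the schemas used in Kafka messages so that producers and consumers agree on data formats. It is a separate component rather than part of Apache Kafka itself (Confluent Schema Registry is a widely used implementation). It supports Avro, Protobuf, and JSON Schema, and it enforces compatibility rules (e.g., backward, forward, or full compatibility) so that schemas can evolve safely.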
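To show what a backward-compatibility check involves, here is a deliberately simplified sketch. The hypothetical `is_backward_compatible` function compares two Avro-style field lists and asks whether a consumer using the new schema can still read data written with the old one. It encodes only two rules, modeled loosely on Avro schema resolution: shared fields must keep the same type, and fields added in the new schema need a default. Real registries also handle type promotion, unions, nested records, and the other compatibility modes.

```python
def is_backward_compatible(old_fields: list, new_fields: list) -> bool:
    """Can a reader using new_fields decode records written with old_fields?

    Simplified rules (assumption, loosely following Avro schema resolution):
    - a field present in both schemas must keep the same type;
    - a field only in the new schema must declare a default;
    - a field only in the old schema is ignored by the new reader.
    """
    old_by_name = {f["name"]: f for f in old_fields}
    for f in new_fields:
        old = old_by_name.get(f["name"])
        if old is None:
            if "default" not in f:
                return False  # old data has no value to fill this field with
        elif old["type"] != f["type"]:
            return False  # type promotions such as int -> long are not modeled
    return True


if __name__ == "__main__":
    v1 = [{"name": "id", "type": "long"}]
    v2 = v1 + [{"name": "email", "type": "string", "default": ""}]
    v3 = v1 + [{"name": "email", "type": "string"}]
    print(is_backward_compatible(v1, v2))  # True: the added field has a default
    print(is_backward_compatible(v1, v3))  # False: the added field has no default
```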

Kafka Connect: A framework for integrating Kafka with external systems (databases, file systems, cloud services, etc.). Kafka Connect provides source connectors (to pull data into Kafka) and sink connectors (to push data out of Kafka).

Kafka Streams: A client library for building real-time applications that process data stored in Kafka, allowing for transformations, aggregations, and more.

Topic Retention: The policy that dictates how long messages are kept in a topic. It can be time-based (e.g., retain messages for 7 days) or size-based (e.g., retain up to 1 GB of messages per partition).
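The following sketch models how time- and size-based retention decide what to delete, borrowing the real topic settings `retention.ms` and `retention.bytes` as parameter names. Kafka deletes whole log segments from the oldest end, never the active (newest) segment, and `retention.bytes` applies per partition. The `Segment` class and `segments_to_delete` function are hypothetical simplifications of that behavior, not the broker's code.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    base_offset: int       # offset of the first record in the segment
    max_timestamp_ms: int  # timestamp of the newest record in the segment
    size_bytes: int


def segments_to_delete(segments, now_ms, retention_ms=None, retention_bytes=None):
    """Return base offsets of segments eligible for deletion, oldest first.

    `segments` is ordered oldest to newest; the last one is the active segment
    and is never deleted in this model.
    """
    remaining = list(segments)
    deleted = []
    # Time-based: drop old segments while their newest record is past retention.
    if retention_ms is not None:
        while len(remaining) > 1 and now_ms - remaining[0].max_timestamp_ms > retention_ms:
            deleted.append(remaining.pop(0).base_offset)
    # Size-based: drop a whole segment only if the partition would still hold
    # at least retention_bytes afterwards.
    if retention_bytes is not None:
        excess = sum(s.size_bytes for s in remaining) - retention_bytes
        while len(remaining) > 1 and excess >= remaining[0].size_bytes:
            excess -= remaining[0].size_bytes
            deleted.append(remaining.pop(0).base_offset)
    return deleted
```

Because only whole segments are removed, a partition can temporarily hold more data, or older data, than the configured limits suggest.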

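Transactional Messaging: A producer feature that writes records to multiple partitions atomically: consumers configured with isolation.level=read_committed see either all of a transaction's records or none of them. Together with idempotent producers, this enables exactly-once processing semantics for applications that read from and write to Kafka.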
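A toy model of what a read_committed consumer sees may help. In the sketch below, the hypothetical `LogEntry` records carry a `txn` label identifying a single transaction, and commit/abort control markers are ordinary entries with a `marker` field. Records from aborted transactions are skipped, and reading stops at the first record of a transaction that is still open (Kafka calls this position the last stable offset). This illustrates the semantics only; it is not how the client library implements them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LogEntry:
    offset: int
    value: Optional[str]
    txn: Optional[str] = None     # label of one transaction; None = non-transactional
    marker: Optional[str] = None  # "COMMIT" or "ABORT" for control markers, else None


def read_committed(entries: list) -> list:
    """Values a read_committed consumer would receive; entries are in offset order."""
    # Outcome of each finished transaction, taken from its control marker.
    outcome = {e.txn: e.marker for e in entries if e.marker is not None}
    # Last stable offset: the first record of any transaction that is still open.
    open_offsets = [e.offset for e in entries if e.txn is not None and e.txn not in outcome]
    lso = min(open_offsets, default=float("inf"))
    visible = []
    for e in entries:
        if e.offset >= lso:
            break  # nothing at or beyond the LSO is delivered yet
        if e.marker is not None:
            continue  # control markers are never handed to the application
        if e.txn is None or outcome[e.txn] == "COMMIT":
            visible.append(e.value)
    return visible


if __name__ == "__main__":
    log = [
        LogEntry(0, "a", txn="t1"),
        LogEntry(1, "b", txn="t2"),
        LogEntry(2, "x"),
        LogEntry(3, None, txn="t1", marker="COMMIT"),
        LogEntry(4, None, txn="t2", marker="ABORT"),
    ]
    print(read_committed(log))  # ['a', 'x']
```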

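Log Compaction: A cleanup policy (cleanup.policy=compact) that retains at least the most recent record for each key and discards older records with the same key. A record with a null value, called a tombstone, marks a key for deletion. Compaction is useful for maintaining a state snapshot, such as the latest value per key.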
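The core rule of compaction can be sketched in a few lines. The hypothetical `compact` function below keeps only the latest record per key and preserves the original offsets, so the compacted log has gaps, as a real compacted partition does. One simplification to note: this sketch drops tombstones immediately, whereas Kafka keeps them for `delete.retention.ms` so that consumers can observe the deletion.

```python
def compact(records: list) -> list:
    """Keep the latest record per key, preserving original offsets and order.

    records: (offset, key, value) tuples, oldest first. A value of None is a
    tombstone; this sketch removes tombstones right away (a simplification).
    """
    latest = {}
    for offset, key, _ in records:
        latest[key] = offset  # later offsets overwrite earlier ones
    return [
        (offset, key, value)
        for offset, key, value in records
        if latest[key] == offset and value is not None
    ]


if __name__ == "__main__":
    log = [(0, "k1", "a"), (1, "k2", "b"), (2, "k1", "c"), (3, "k2", None), (4, "k3", "d")]
    print(compact(log))  # [(2, 'k1', 'c'), (4, 'k3', 'd')]
```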

KSQL: KSQL (since renamed ksqlDB) is a streaming SQL engine from Confluent, built on Kafka Streams, which allows you to query, transform, and aggregate data in Kafka topics using SQL-like statements.

ZooKeeper: Older Kafka versions rely on Apache ZooKeeper, a separate coordination service, to manage cluster metadata, broker coordination, and controller election. Newer versions replace it with KRaft, a built-in Raft-based metadata quorum, and Kafka 4.0 removes ZooKeeper support entirely.

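Durability: Kafka persists data to disk and replicates it across multiple brokers. With acks=all, a write is acknowledged only after all in-sync replicas have it, so it survives broker failures as long as one of those replicas remains available; min.insync.replicas sets how many in-sync replicas must exist for such a write to be accepted.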

High Throughput: Kafka can handle a high volume of data with low latency. It achieves this by batching messages, appending them sequentially to disk, and using the operating system's zero-copy transfer (sendfile) to send data from the page cache directly to the network.

Fault Tolerance: Kafka replicates each partition across brokers. If the broker hosting a partition's leader fails, an in-sync follower on another broker is elected leader, and clients continue reading and writing through it.

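Scalability: Kafka’s partition-based architecture allows horizontal scaling. You can add brokers to the cluster, but Kafka does not automatically move existing partitions onto them; you rebalance by reassigning partitions (for example, with the kafka-reassign-partitions.sh tool) or with an external balancing tool.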

Retention: Kafka allows for configuring the retention policy of messages. You can store messages indefinitely or delete them after a certain period or when the log reaches a specific size. This makes Kafka flexible for different use cases, whether you need short-term processing or long-term storage.

- Real-Time Analytics: Kafka is widely used in big data environments where companies want to process massive streams of events in real time. For example, LinkedIn uses Kafka for tracking activity data and operational metrics, feeding into both batch and stream processing systems.

- Log Aggregation: Kafka can aggregate logs from multiple services or applications, making it easier to analyze them or store them for future reference. This is useful for monitoring, diagnostics, and troubleshooting.

- Event Sourcing: Event sourcing records every state change as an immutable event and rebuilds current state by replaying those events. Kafka's durable, ordered log makes it a common backbone for this pattern and for event-driven architectures in general, where consumers process events in real time or replay them later to handle complex workflows and state changes.

- Messaging System: Kafka can replace traditional message brokers like RabbitMQ or ActiveMQ, especially when dealing with high-throughput messaging needs.

- Data Pipelines: Kafka serves as a backbone for large-scale data pipelines, allowing the integration of data across multiple systems, such as databases, analytics platforms, and machine learning systems.
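The replay idea behind event sourcing fits in a few lines. The sketch below assumes a hypothetical account domain with `Deposited` and `Withdrawn` events, and assumes all events for an account share a key so they land in one partition and stay in order. Rebuilding state is then a left fold of a hypothetical `apply_event` function over the events, which mirrors what a consumer does when it reads a partition from the beginning.

```python
from functools import reduce


def apply_event(state: dict, event: dict) -> dict:
    """Return a new balances dict with one event applied (state is not mutated)."""
    account = event["account"]
    balance = state.get(account, 0)
    if event["type"] == "Deposited":
        balance += event["amount"]
    elif event["type"] == "Withdrawn":
        balance -= event["amount"]
    else:
        raise ValueError(f"unknown event type: {event['type']}")
    return {**state, account: balance}


def rebuild(events) -> dict:
    """Replay events in order, as a consumer reading a partition from the start would."""
    return reduce(apply_event, events, {})


if __name__ == "__main__":
    history = [
        {"type": "Deposited", "account": "A", "amount": 100},
        {"type": "Withdrawn", "account": "A", "amount": 30},
        {"type": "Deposited", "account": "B", "amount": 5},
    ]
    print(rebuild(history))  # {'A': 70, 'B': 5}
```

Because state is derived entirely from the log, a new consumer (or a fixed version of an old one) can rebuild it from scratch, provided the topic's retention keeps the full history.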

- LinkedIn (where Kafka was originally developed)

- Netflix (for real-time monitoring and analytics)

- Uber (for geospatial tracking and event-based communication)

- Airbnb (for real-time data flow management)

- Twitter (for its log aggregation and stream processing systems)

- Java: Kafka runs on the JVM, so install a Java version supported by your Kafka release.

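- ZooKeeper (older releases only): Older Kafka versions use ZooKeeper to manage brokers, topics, and other cluster metadata, and their downloads include scripts and a configuration file for starting it. Newer versions run in KRaft mode, which needs no ZooKeeper; Kafka 4.0 supports only KRaft.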

- Configure Kafka for production: You’ll need to modify the server.properties file (e.g., set the broker ID, configure log retention, and tune replication settings).

- Monitoring and logging: Set up metrics and logging. Kafka exposes its metrics over JMX, which tools such as Prometheus (typically via a JMX exporter) and Grafana can collect and visualize.
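As a rough illustration of production checks, the sketch below parses simple `key=value` lines from a server.properties-style file and flags two common durability concerns. `default.replication.factor` and `min.insync.replicas` are real broker settings (both default to 1), but the hypothetical `parse_properties` and `check_durability` helpers are only a sketch: the parser handles just `key=value` lines and `#`/`!` comments (real Java properties syntax is richer), and the thresholds encode one common guideline (replication factor 3 with `min.insync.replicas` of 2) rather than an official rule.

```python
def parse_properties(text: str) -> dict:
    """Parse simple key=value lines; lines starting with '#' or '!' are comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_durability(props: dict) -> list:
    """Return warnings about replication settings (illustrative guideline only)."""
    warnings = []
    rf = int(props.get("default.replication.factor", "1"))  # broker default is 1
    min_isr = int(props.get("min.insync.replicas", "1"))     # broker default is 1
    if rf < 3:
        warnings.append(f"default.replication.factor={rf}: consider 3 for production")
    if min_isr >= rf:
        warnings.append("min.insync.replicas >= replication factor: acks=all writes fail if any replica is out of sync")
    elif min_isr < 2:
        warnings.append("min.insync.replicas < 2: acks=all writes may be acknowledged by a single replica")
    return warnings


if __name__ == "__main__":
    sample = "# example\nbroker.id=1\ndefault.replication.factor=3\nmin.insync.replicas=2\n"
    print(check_durability(parse_properties(sample)))  # []
```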

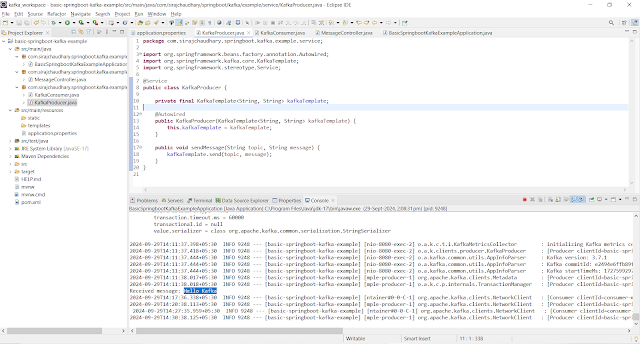

- Spring Web

- Spring for Apache Kafka