GitHub Copilot is an AI-powered code completion tool developed by GitHub in collaboration with OpenAI. It suggests code snippets, entire functions, and even documentation as you type, which can speed up routine coding. Here's how it works and what it offers:

Code Suggestions

- GitHub Copilot can suggest entire lines or blocks of code based on the context of your current coding task.

- It adapts its suggestions to the code around your cursor, so they tend to follow the naming and style you are already using.
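As a rough illustration of context-driven suggestions, consider the sketch below. The function names are hypothetical, and the second function body is written by hand to show the kind of completion a tool like Copilot might propose once the first function exists in the file. It is not captured Copilot output.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32


# With the function above already in the file, typing only the next
# signature is often enough context for an assistant to propose the
# mirrored body (illustrative; actual suggestions vary).
def fahrenheit_to_celsius(fahrenheit: float) -> float:
    """Convert a temperature from Fahrenheit to Celsius."""
    return (fahrenheit - 32) * 5 / 9
```

The point is that the existing code acts as the prompt: the more consistent the surrounding file, the more likely a suggestion fits it.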

Supports Multiple Languages

- Copilot supports a wide range of programming languages including Python, JavaScript, TypeScript, Ruby, Go, Java, C#, and more.

- It works across multiple frameworks and libraries.

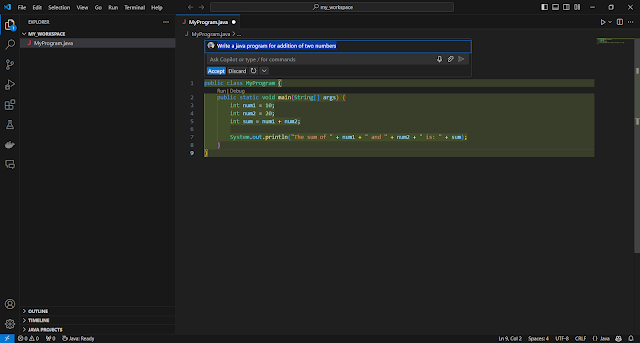

Contextual Awareness

- It understands comments and context within the code. If you describe a function in a comment, Copilot can generate a full implementation.

- It can interpret comments and variable names, and can suggest the import statements that the generated code needs.

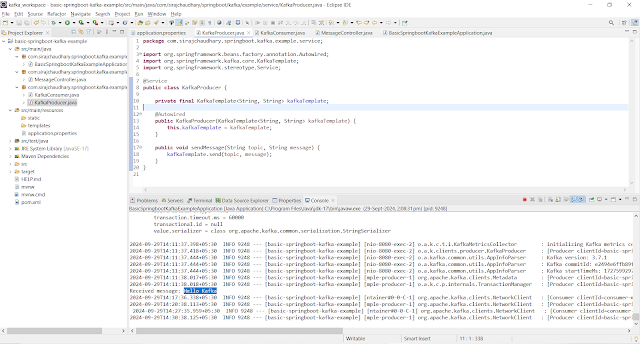
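To make the comment-to-code idea concrete, here is a minimal sketch. The comment is the kind of prompt you might write, and the function beneath it (the hypothetical `most_common_words`) is a hand-written example of an implementation an assistant could plausibly suggest, including the imports it relies on. Real suggestions will differ.

```python
import re
from collections import Counter


# Return the n most common words in `text`, ignoring case and punctuation.
def most_common_words(text: str, n: int = 3) -> list:
    # Lowercase the text and keep only runs of letters and apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    # Counter.most_common returns (word, count) pairs, highest count first.
    return Counter(words).most_common(n)
```

A quick call such as `most_common_words("The cat and the hat. The end!", 1)` is an easy way to check that a suggestion does what the comment asked for.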

Integrated in Development Environments

- GitHub Copilot is available as an extension for Visual Studio Code (VS Code), making it easy to integrate into your existing workflow.

- It also works in other editors, such as JetBrains IDEs.

Learning from Open Source Code

- Copilot is trained on a vast amount of publicly available open-source code, helping it suggest relevant code patterns and solutions.

Limitations

- It doesn’t always generate perfect code, and in some cases, suggestions might need refinement.

- It can sometimes suggest code snippets that may have security vulnerabilities or outdated patterns, so developers need to verify the suggestions.

- Copilot is not aware of private code unless it has been specifically trained on it or given access to it, which helps protect privacy.
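The need to verify suggestions can be shown with a small, self-contained sketch. The table, data, and function names below are hypothetical, and the "unsafe" function is a hand-written example of a vulnerable pattern an assistant might produce, not actual Copilot output. It builds SQL with string formatting, while the reviewed version uses a parameterized query.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # Risky pattern: user input is pasted into the SQL text, so crafted
    # input can change the meaning of the query (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Reviewed version: the placeholder keeps the input as plain data.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (username,)).fetchall()


# In-memory database with two sample rows for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

malicious = "nobody' OR '1'='1"
print(find_user_unsafe(conn, malicious))  # matches every row
print(find_user_safe(conn, malicious))    # matches nothing
```

Both functions look reasonable at a glance, which is why reviewing and testing accepted suggestions matters.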

Ethical Considerations

- Since Copilot is trained on public repositories, some concerns have been raised about licensing, particularly whether the code snippets it generates might unintentionally include license-protected code.

How to Use GitHub Copilot

Installation

- You can install GitHub Copilot as an extension in Visual Studio Code by searching for "GitHub Copilot" in the Extensions marketplace.

Subscription

- As of 2023, GitHub Copilot requires a subscription, though it provides a free trial for users to test its features.

Workflow

- After installation, Copilot starts suggesting code in real time as you write in a supported language.

- You can accept a suggestion by pressing Tab, or cycle through alternative suggestions with keyboard shortcuts (in VS Code, Alt+] and Alt+[ by default, or Option+] and Option+[ on macOS).

- Open VS Code.

- Go to the Extensions panel (on the sidebar or Ctrl+Shift+X).

- Search for "GitHub Copilot" and click Install.

- Search for "GitHub Copilot Chat" and click Install.

- After installing the extension, you'll be prompted to sign in to your GitHub account.

- Make sure your account has access to GitHub Copilot (it requires a paid subscription or a free trial).

- Create a new file with an appropriate file extension (e.g. .js, .py, .java).

Note: the first time you use it, VS Code may suggest installing a few essential extensions based on the type of file you create (e.g. .java). You should install them.

GitHub Copilot vs GitHub Copilot Chat

- Copilot provides real-time code suggestions to speed up your workflow, while Copilot Chat offers deeper interactions like answering specific questions, helping with debugging, and explaining code when needed.

- You can think of Copilot as a passive code-writing assistant and Copilot Chat as a more active, conversational partner.